Static Site Deployment using AWS CloudFront, S3 and the CDK

Posted February 25, 2022 ‐ 7 min read

In this article we'll review how to deploy a statically generated site to AWS. Our focus will be on the infrastructure setup rather than the deployed content, so the same setup can be used to deploy a variety of statically generated sites, including personal blogs and frontend applications.

I recently went through the process of setting up my own site and wanted to share some best practices that I learned along the way. The Cloud Development Kit (CDK) code described in this article is available on GitHub and is based on an existing example from AWS.

There are many great options available today to deploy a site or an application. Solutions are offered by Netlify, Cloudflare, and Vercel, just to name a few. For my site, I've chosen to use AWS simply because I feel fairly comfortable with it and use it often for work.

Content Delivery Network (CDN)

One of the main benefits of a static site is its simplicity. The site is generated once per revision, and the generated files are then cached and served quickly to users. Distribution and caching are handled by a Content Delivery Network, or CDN.

A CDN consists of many Points of Presence (PoPs) around the world, also known as edge locations. When a user requests a particular file from our site, DNS routes the request to a nearby edge location (for CloudFront, the one that can serve it with the lowest latency). If the file is cached at that location, this is a cache hit and the file is served to the user immediately. If the file has not been cached yet, or its cached copy has expired (a cache miss), the edge location requests the file from an origin server, relays it to the user, and caches it locally for future requests.
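To make the hit/miss flow concrete, here is a toy TypeScript model of a single edge location. It is a sketch, not CloudFront code: the `EdgeLocation` class, the fixed TTL, and the origin callback are assumptions for illustration (in CloudFront, expiry is governed by cache policies and the origin's caching headers).

```typescript
// Toy model of one edge location (not CloudFront code): cached entries
// expire after a fixed TTL, and misses are fetched from the origin.
type FetchFromOrigin = (path: string) => string;

interface CacheEntry {
  body: string;
  cachedAtSeconds: number;
}

interface EdgeResponse {
  body: string;
  status: "Hit" | "Miss";
}

class EdgeLocation {
  private readonly cache = new Map<string, CacheEntry>();
  private readonly origin: FetchFromOrigin;
  private readonly ttlSeconds: number;

  constructor(origin: FetchFromOrigin, ttlSeconds: number) {
    this.origin = origin;
    this.ttlSeconds = ttlSeconds;
  }

  get(path: string, nowSeconds: number): EdgeResponse {
    const entry = this.cache.get(path);
    // Cache hit: a copy exists and is still within its TTL.
    if (entry !== undefined && nowSeconds - entry.cachedAtSeconds < this.ttlSeconds) {
      return { body: entry.body, status: "Hit" };
    }
    // Cache miss: never cached, or the cached copy expired. Ask the origin,
    // store the fresh copy locally, and relay it to the user.
    const body = this.origin(path);
    this.cache.set(path, { body, cachedAtSeconds: nowSeconds });
    return { body, status: "Miss" };
  }
}

// Usage: one file requested three times against a 60-second TTL.
let originRequests = 0;
const edge = new EdgeLocation((path) => {
  originRequests += 1;
  return `contents of ${path}`;
}, 60);

console.log(edge.get("/index.html", 0).status);  // Miss (first request)
console.log(edge.get("/index.html", 30).status); // Hit (still fresh)
console.log(edge.get("/index.html", 90).status); // Miss (expired, refetched)
console.log(originRequests);                     // 2
```

Only the first request and the request after expiry reach the origin; every other request is answered from the edge.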

Caching content close to users improves page load times and provides a better experience. It also reduces the load on a centralized origin server and our dependency on it.

S3 Bucket as an Origin Server

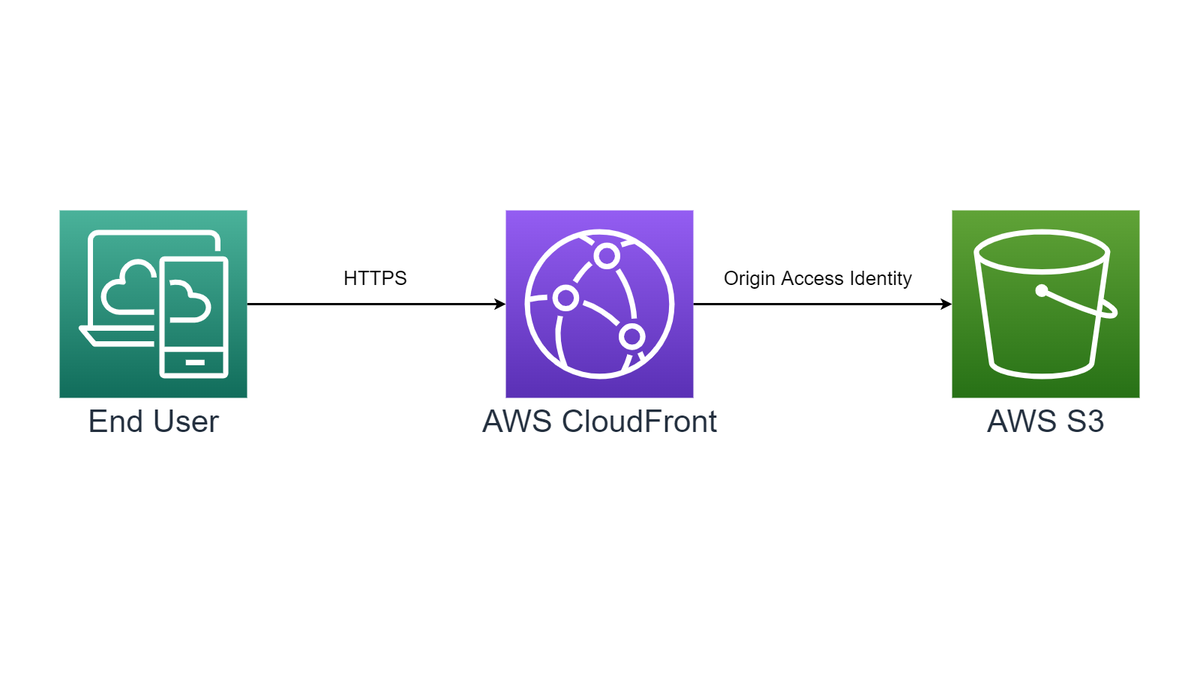

A very common setup is to use an S3 bucket as an origin server. In our implementation we set this up as shown in the following simplified diagram:

Here is how we provision our S3 bucket using the CDK and TypeScript:

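```typescript
// Assumes CDK v2 (aws-cdk-lib). Imports go at the top of the stack file;
// the construct code runs inside the stack's constructor.
import { RemovalPolicy } from 'aws-cdk-lib';
import * as s3 from 'aws-cdk-lib/aws-s3';

const assetsBucket = new s3.Bucket(this, 'WebsiteBucket', {
  publicReadAccess: false,
  // Block every form of public access; content is served only via CloudFront.
  blockPublicAccess: s3.BlockPublicAccess.BLOCK_ALL,
  // Keep the bucket (and the site's content) if the stack is deleted.
  removalPolicy: RemovalPolicy.RETAIN,
  accessControl: s3.BucketAccessControl.PRIVATE,
  // Disable ACLs; the bucket owner owns every object.
  objectOwnership: s3.ObjectOwnership.BUCKET_OWNER_ENFORCED,
  // SSE-S3 encryption (an OAI cannot read SSE-KMS-encrypted objects).
  encryption: s3.BucketEncryption.S3_MANAGED,
});
```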

We want users to access the site's content only through CloudFront, so, as the listing above shows, public access to the S3 bucket is turned off. Note also that we have not enabled S3's static website hosting feature: it lacks the caching and distribution benefits that CloudFront provides, and S3 website endpoints only support HTTP. We could go further and restrict access to the bucket's content with CloudFront signed URLs or signed cookies, which would be useful if we wanted to offer paid content, for example.

To let users access the files in the S3 bucket through CloudFront, we create a special type of CloudFront user that is associated with our S3 bucket. This user, called the Origin Access Identity (OAI) user, requests files on behalf of our site's users. The OAI user is granted read access (s3:GetObject) to the bucket's objects through the bucket resource policy, as shown in the following code snippet:

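```typescript
import * as cloudfront from 'aws-cdk-lib/aws-cloudfront';
import * as iam from 'aws-cdk-lib/aws-iam';

// CloudFront identity that fetches objects from the bucket on behalf of viewers.
const cloudfrontOAI = new cloudfront.OriginAccessIdentity(
  this, 'CloudFrontOriginAccessIdentity');

// Bucket policy: only the OAI may read (s3:GetObject) objects in the bucket.
assetsBucket.addToResourcePolicy(new iam.PolicyStatement({
  actions: ['s3:GetObject'],
  resources: [assetsBucket.arnForObjects('*')],
  principals: [new iam.CanonicalUserPrincipal(
    cloudfrontOAI.cloudFrontOriginAccessIdentityS3CanonicalUserId)],
}));
```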
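Once CDK resolves its tokens, the statement above amounts to a small JSON bucket policy. The following sketch builds that JSON by hand to show its shape; `oaiReadOnlyPolicy` is a hypothetical helper, the standard `aws` partition is assumed, and the bucket name and canonical user ID are placeholders. The policy CDK actually synthesizes will differ in formatting.

```typescript
// Sketch of the JSON bucket policy equivalent to the PolicyStatement above.
// Assumes the "aws" partition; bucket name and canonical user ID are placeholders.
interface CanonicalUserStatement {
  Effect: "Allow";
  Principal: { CanonicalUser: string };
  Action: string;
  Resource: string;
}

interface BucketPolicy {
  Version: "2012-10-17";
  Statement: CanonicalUserStatement[];
}

// Hypothetical helper: grant read-only object access to a single OAI.
function oaiReadOnlyPolicy(bucketName: string, oaiCanonicalUserId: string): BucketPolicy {
  return {
    Version: "2012-10-17",
    Statement: [
      {
        Effect: "Allow",
        Principal: { CanonicalUser: oaiCanonicalUserId },
        Action: "s3:GetObject",
        // arnForObjects('*') covers every object key in the bucket.
        Resource: `arn:aws:s3:::${bucketName}/*`,
      },
    ],
  };
}

console.log(JSON.stringify(oaiReadOnlyPolicy("my-site-bucket", "EXAMPLE-CANONICAL-USER-ID"), null, 2));
```

Because the OAI is granted s3:GetObject but not s3:ListBucket, S3 answers a request for a key that does not exist with 403 Access Denied rather than 404, which is worth knowing when debugging broken links.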

HTTPS and TLS Site Certificate

An important aspect of our site is security. HTTP requests should be redirected to HTTPS, and the site should be served with a TLS certificate to secure communication with the client. Using AWS Certificate Manager (ACM), we provision a public TLS certificate that is associated with our domain name, as shown in the following listing:

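```typescript
import * as acm from 'aws-cdk-lib/aws-certificatemanager';
import * as route53 from 'aws-cdk-lib/aws-route53';

// Look up the existing hosted zone for the domain
// (context lookups need an explicit account and region on the stack).
const zone = route53.HostedZone.fromLookup(this, 'HostedZone',
  { domainName: domainName });

// Public certificate, validated through a DNS record added to the zone.
// CloudFront only accepts certificates issued in us-east-1.
const certificate = new acm.DnsValidatedCertificate(this,
  'SiteCertificate',
  {
    domainName: domainName,
    hostedZone: zone,
    region: 'us-east-1',
  });
```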

One prerequisite is that the AWS account into which the site's CDK stack is deployed manages a Route 53 hosted zone for the registered domain name. This matters because the CDK adds a CNAME record to the hosted zone in order to validate domain ownership. Note also that CloudFront only accepts ACM certificates from the us-east-1 region, which is why that region is hard-coded in the listing above.
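If you import an existing certificate by ARN (for example with `acm.Certificate.fromCertificateArn`) instead of creating one, a quick check can catch the region mistake before deployment. The sketch below is hypothetical and not part of the demo stack; it only parses the ARN, which has the form `arn:partition:service:region:account-id:resource`.

```typescript
// Hypothetical pre-deployment check (not part of the demo stack):
// CloudFront only accepts ACM certificates from us-east-1.
// ARN layout: arn:partition:service:region:account-id:resource,
// so the region is field 3 (0-based) after splitting on ':'.
function acmCertificateRegion(certificateArn: string): string {
  const fields = certificateArn.split(":");
  if (fields.length < 6 || fields[0] !== "arn" || fields[2] !== "acm") {
    throw new Error(`Not an ACM certificate ARN: ${certificateArn}`);
  }
  return fields[3];
}

function usableByCloudFront(certificateArn: string): boolean {
  return acmCertificateRegion(certificateArn) === "us-east-1";
}

// Placeholder ARNs (fake account ID and certificate ID).
console.log(usableByCloudFront("arn:aws:acm:us-east-1:123456789012:certificate/11111111-2222-3333-4444-555555555555"));
console.log(usableByCloudFront("arn:aws:acm:eu-west-1:123456789012:certificate/11111111-2222-3333-4444-555555555555"));
```

This only works on literal ARN strings: `certificate.certificateArn` of a certificate created in the stack is an unresolved token at synth time.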

CloudFront Functions and URL Rewrites

A very useful feature of CloudFront is the ability to run short-lived functions at edge locations. CloudFront Functions are designed to run in under a millisecond and are meant for simple manipulation of HTTP requests and responses. In our example we deploy a single CloudFront function:

```typescript
// filePath is read relative to the directory the CDK app is run from.
const rewriteFunction = new cloudfront.Function(this, 'Function', {
  code: cloudfront.FunctionCode.fromFile({ filePath: 'functions/url-rewrite.js' }),
});
```

The following function rewrites URLs ending with a trailing slash so that they point to an index.html page. For example, if a user requests /blog/my-article/, the URL is rewritten to /blog/my-article/index.html, which is the actual object key retrieved from the associated S3 bucket. This type of function is called a "viewer request" function because it runs when CloudFront receives a request from a viewer, before CloudFront checks its cache.

```javascript
// url-rewrite.js
// See other AWS examples at https://github.com/aws-samples/amazon-cloudfront-functions/

function handler(event) {
  var request = event.request;

  // Map directory-style URIs to the index.html object stored in S3.
  if (request.uri.endsWith('/')) {
    request.uri += 'index.html';
  }

  return request;
}
```
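To experiment with the rewrite rule locally, the sketch below models `url-rewrite.js` in TypeScript. The event interfaces cover only the `uri` field and are an assumption, not the full CloudFront Functions event type; the deployed function itself stays plain JavaScript. `rewriteWithExtensionlessPaths` is a hypothetical variant that is not part of the article's function.

```typescript
// TypeScript model of url-rewrite.js for local experimentation.
// Assumed minimal event shape: only the fields used here are modeled.
interface ViewerRequest {
  uri: string;
}

interface ViewerRequestEvent {
  request: ViewerRequest;
}

// Same rule as url-rewrite.js: append index.html to URIs ending in '/'.
function handler(event: ViewerRequestEvent): ViewerRequest {
  const request = event.request;
  if (request.uri.endsWith("/")) {
    request.uri += "index.html";
  }
  return request;
}

// Hypothetical variant, not part of the article's function: also rewrite
// extensionless paths such as /blog/my-article to /blog/my-article/index.html.
function rewriteWithExtensionlessPaths(uri: string): string {
  if (uri.endsWith("/")) {
    return uri + "index.html";
  }
  const lastSegment = uri.substring(uri.lastIndexOf("/") + 1);
  return lastSegment.includes(".") ? uri : uri + "/index.html";
}

for (const uri of ["/", "/blog/my-article/", "/blog/my-article", "/styles/main.css"]) {
  console.log(`${uri} -> ${handler({ request: { uri } }).uri} | variant: ${rewriteWithExtensionlessPaths(uri)}`);
}
```

With only the trailing-slash rule, a request for /blog/my-article reaches S3 under that exact key, which does not exist, so the viewer gets an error instead of the page. Whether the variant is worth adding depends on how the site generator writes its links.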

Response Headers Policy - Security Headers

CloudFront Functions can also modify HTTP responses. We could write a function that adds important HTTP security headers to each response, but a better option is to configure a response headers policy. A response headers policy is declarative and requires no additional code.

Some headers improve the security of your website by directing the browser to behave in a certain way. For example, the HTTP Strict-Transport-Security (HSTS) header tells the browser to use only HTTPS for future requests to the site. Another important header is Content-Security-Policy, which restricts the resources the browser may load for the page, for example by allowing content to be loaded only from the site's own origin.
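For comparison, this is roughly what the code-based alternative would look like: a viewer-response function that sets the same six headers as the response headers policy in this section. It is a TypeScript sketch with minimal, assumed event interfaces. In CloudFront Functions, headers are lowercase keys holding `{ value: ... }` objects, and a deployed version would have to be plain JavaScript with a `handler` entry point.

```typescript
// Sketch of a viewer-response function that adds security headers.
// Assumed minimal event shape: only the fields used here are modeled.
interface HeaderValue {
  value: string;
}

interface ViewerResponse {
  statusCode: number;
  headers: { [lowercaseName: string]: HeaderValue };
}

interface ViewerResponseEvent {
  response: ViewerResponse;
}

// Two years, matching Duration.days(2 * 365): 63,072,000 seconds.
const HSTS_MAX_AGE_SECONDS = 2 * 365 * 24 * 60 * 60;

function addSecurityHeaders(event: ViewerResponseEvent): ViewerResponse {
  const response = event.response;
  const headers = response.headers;
  headers["strict-transport-security"] = {
    value: `max-age=${HSTS_MAX_AGE_SECONDS}; includeSubDomains; preload`,
  };
  headers["content-security-policy"] = { value: "default-src 'self'" };
  headers["x-content-type-options"] = { value: "nosniff" };
  headers["referrer-policy"] = { value: "strict-origin-when-cross-origin" };
  headers["x-xss-protection"] = { value: "1; mode=block" };
  headers["x-frame-options"] = { value: "DENY" };
  return response;
}

const updated = addSecurityHeaders({ response: { statusCode: 200, headers: {} } });
console.log(updated.headers["strict-transport-security"].value);
```

Every header value here is a string that has to be kept correct by hand, and the function runs on every response; that is the maintenance the declarative policy avoids.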

We specify overrides for six common security headers in the following response headers policy:

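```typescript
import { Duration } from 'aws-cdk-lib';

// override: true replaces any value the origin already sent for a header.
const responseHeaderPolicy = new cloudfront.ResponseHeadersPolicy(this, 'SecurityHeadersResponseHeaderPolicy', {
  comment: 'Security headers response header policy',
  securityHeadersBehavior: {
    // Content-Security-Policy: only load resources from the site's own origin.
    contentSecurityPolicy: {
      override: true,
      contentSecurityPolicy: "default-src 'self'"
    },
    // Strict-Transport-Security: HTTPS only for two years, including subdomains.
    strictTransportSecurity: {
      override: true,
      accessControlMaxAge: Duration.days(2 * 365),
      includeSubdomains: true,
      preload: true
    },
    // X-Content-Type-Options: nosniff
    contentTypeOptions: {
      override: true
    },
    // Referrer-Policy: strict-origin-when-cross-origin
    referrerPolicy: {
      override: true,
      referrerPolicy: cloudfront.HeadersReferrerPolicy.STRICT_ORIGIN_WHEN_CROSS_ORIGIN
    },
    // X-XSS-Protection: 1; mode=block
    xssProtection: {
      override: true,
      protection: true,
      modeBlock: true
    },
    // X-Frame-Options: DENY (the site cannot be embedded in frames)
    frameOptions: {
      override: true,
      frameOption: cloudfront.HeadersFrameOption.DENY
    }
  }
});
```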

With these security headers in place, check your site with Mozilla Observatory and see how it scores.

Bringing it all Together: The CloudFront Distribution

With the S3 bucket, OAI user, TLS certificate, and URL rewrite function in place, let's bring everything together in a CloudFront distribution. The distribution configuration is replicated to all edge locations. A distribution can have several origins as well as several behaviors, each of which applies to requests matching a particular path pattern. In the following listing we define a single default behavior, whose path pattern matches all requests:

```typescript
import * as origins from 'aws-cdk-lib/aws-cloudfront-origins';

const cloudfrontDistribution = new cloudfront.Distribution(this, 'CloudFrontDistribution', {
  certificate: certificate,
  domainNames: [domainName],
  // Object served for requests to the root URL ("/").
  defaultRootObject: 'index.html',
  // The default behavior matches every path ("*").
  defaultBehavior: {
    origin: new origins.S3Origin(assetsBucket, {
      originAccessIdentity: cloudfrontOAI
    }),
    functionAssociations: [{
      function: rewriteFunction,
      eventType: cloudfront.FunctionEventType.VIEWER_REQUEST
    }],
    viewerProtocolPolicy: cloudfront.ViewerProtocolPolicy.REDIRECT_TO_HTTPS,
    responseHeadersPolicy: responseHeaderPolicy
  },
});
```
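The distribution above relies on the default behavior alone. To illustrate how a distribution with several behaviors routes requests, here is a toy TypeScript model; `selectBehavior`, `pathPatternToRegExp`, and the `/api/*` behavior are hypothetical and not part of the demo stack. It follows CloudFront's documented matching rules: behaviors are evaluated in order, patterns are case-sensitive, `*` matches any run of characters, `?` matches exactly one character, and the default behavior catches everything else.

```typescript
// Toy model (not CloudFront code) of how a distribution picks a cache behavior.
interface CacheBehavior {
  pathPattern: string;
  originId: string;
}

function pathPatternToRegExp(pattern: string): RegExp {
  // A leading "/" is optional in CloudFront path patterns, so normalize it.
  const normalized = pattern.startsWith("/") ? pattern : "/" + pattern;
  let source = "";
  for (const ch of normalized) {
    if (ch === "*") {
      source += ".*"; // any sequence of characters
    } else if (ch === "?") {
      source += "."; // exactly one character
    } else {
      source += ch.replace(/[.+^${}()|[\]\\]/g, "\\$&"); // escape regex metacharacters
    }
  }
  return new RegExp("^" + source + "$"); // case-sensitive, whole-path match
}

function selectBehavior(path: string, ordered: CacheBehavior[], fallback: CacheBehavior): CacheBehavior {
  for (const behavior of ordered) {
    if (pathPatternToRegExp(behavior.pathPattern).test(path)) {
      return behavior; // first matching pattern wins
    }
  }
  return fallback; // the default behavior ("*")
}

// Usage: a hypothetical "/api/*" behavior in front of the S3-backed default behavior.
const defaultBehavior: CacheBehavior = { pathPattern: "*", originId: "assets-bucket" };
const additionalBehaviors: CacheBehavior[] = [{ pathPattern: "/api/*", originId: "hypothetical-api-origin" }];

console.log(selectBehavior("/api/users", additionalBehaviors, defaultBehavior).originId);
console.log(selectBehavior("/blog/my-article/", additionalBehaviors, defaultBehavior).originId);
```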

With the distribution in place, the final piece is to create an alias A record in the domain's hosted zone that points to the CloudFront distribution:

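```typescript
import * as route53targets from 'aws-cdk-lib/aws-route53-targets';

// Alias A record: resolves the site's domain name to the distribution.
new route53.ARecord(this, 'ARecord', {
  recordName: domainName,
  target: route53.RecordTarget.fromAlias(new route53targets.CloudFrontTarget(cloudfrontDistribution)),
  zone
});
```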
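Under the hood, `CloudFrontTarget` turns this into a Route 53 alias record. The sketch below spells out that record using CloudFormation-style property names; `cloudFrontAliasRecord` is a hypothetical helper and the domain names are placeholders. The one fixed value is `Z2FDTNDATAQYW2`, the hosted zone ID Route 53 uses for every CloudFront alias target.

```typescript
// Sketch of the Route 53 alias record behind ARecord + CloudFrontTarget,
// using CloudFormation-style property names. Domain names are placeholders.
const CLOUDFRONT_HOSTED_ZONE_ID = "Z2FDTNDATAQYW2"; // same for all CloudFront aliases

interface AliasARecord {
  Name: string;
  Type: "A";
  AliasTarget: {
    HostedZoneId: string;
    DNSName: string;
    EvaluateTargetHealth: boolean;
  };
}

// Hypothetical helper, not a CDK or AWS SDK function.
function cloudFrontAliasRecord(domainName: string, distributionDomainName: string): AliasARecord {
  return {
    // Written in fully qualified form, with a trailing dot.
    Name: domainName.endsWith(".") ? domainName : `${domainName}.`,
    Type: "A",
    AliasTarget: {
      HostedZoneId: CLOUDFRONT_HOSTED_ZONE_ID,
      DNSName: distributionDomainName,
      // Route 53 does not allow target health evaluation for CloudFront aliases.
      EvaluateTargetHealth: false,
    },
  };
}

console.log(JSON.stringify(cloudFrontAliasRecord("example.com", "d111111abcdef8.cloudfront.net"), null, 2));
```

An alias is used rather than a CNAME because the site's domain is often the zone apex (such as example.com), where DNS does not permit a CNAME record.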

Deploying the Demo CDK Stack

To use the demo code accompanying this article, make sure to set the AWS account and region (the hosted zone lookup needs both) as well as the site's domain name. I hope the code helps you set up your own site with ease.
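One way to supply these values is to resolve them from environment variables in the CDK app's entry file. This is a sketch under assumptions: `CDK_DEFAULT_ACCOUNT` and `CDK_DEFAULT_REGION` are set by the CDK CLI from the active AWS profile, while `SITE_DOMAIN_NAME`, `resolveSiteSettings`, and the stack class name in the comment are hypothetical.

```typescript
// Hypothetical settings resolver for a CDK app entry point.
// CDK_DEFAULT_ACCOUNT / CDK_DEFAULT_REGION come from the CDK CLI;
// SITE_DOMAIN_NAME is an assumed variable name, not defined by the demo repository.
type EnvironmentVariables = { [name: string]: string | undefined };

interface SiteStackSettings {
  account: string;
  region: string;
  domainName: string;
}

function resolveSiteSettings(vars: EnvironmentVariables): SiteStackSettings {
  const account = vars["CDK_DEFAULT_ACCOUNT"];
  const region = vars["CDK_DEFAULT_REGION"];
  const domainName = vars["SITE_DOMAIN_NAME"];
  // HostedZone.fromLookup fails at synth time without a concrete account and region.
  if (!account || !region || !domainName) {
    throw new Error("Set CDK_DEFAULT_ACCOUNT, CDK_DEFAULT_REGION and SITE_DOMAIN_NAME before deploying");
  }
  return { account, region, domainName };
}

// In the real entry file you would pass process.env and forward the result, e.g.
// new StaticSiteStack(app, 'StaticSite', { env: { account, region }, ... })
// (StaticSiteStack is a placeholder for the demo's stack class).
console.log(resolveSiteSettings({
  CDK_DEFAULT_ACCOUNT: "123456789012",
  CDK_DEFAULT_REGION: "eu-west-1",
  SITE_DOMAIN_NAME: "example.com",
}));
```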